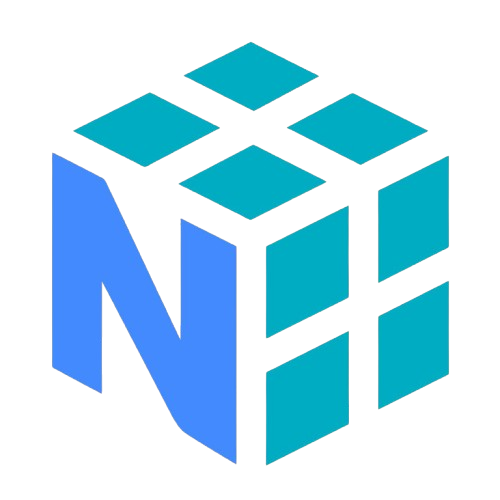

The Perception-Action Loop

A Mac Mini captures the screen, sends it to Claude Opus 4.6 via API, receives action decisions, and executes them with human-like input simulation — all running autonomously.

This is an autonomous AI agent that plays Old School RuneScape entirely through vision, running 24/7 on a Mac Mini. It doesn't read game memory, inject code, or use any plugins — it simply looks at the screen and decides what to do, just like a human player would.

Every few seconds, the Mac Mini captures a screenshot of the game and sends it to Claude Opus 4.6 (Anthropic's most powerful AI model), which returns a structured decision: what it sees, what it thinks, and what actions to take. Those actions (mouse clicks, keyboard presses, minimap navigation) are executed with human-like mouse movement along Bézier curves and with randomized timing.
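To make the "human-like" input idea concrete, here is a minimal sketch of a curved mouse path with jittered timing. It is an illustration under assumptions, not the agent's actual code: the function names (`cubic_bezier`, `mouse_path`, `step_delays`), the control-point placement, and all default values are hypothetical.

```python
import random
from typing import List, Optional, Tuple

Point = Tuple[float, float]


def cubic_bezier(p0: Point, p1: Point, p2: Point, p3: Point, t: float) -> Point:
    """Evaluate a cubic Bezier curve at parameter t in [0, 1]."""
    u = 1.0 - t
    x = u**3 * p0[0] + 3 * u**2 * t * p1[0] + 3 * u * t**2 * p2[0] + t**3 * p3[0]
    y = u**3 * p0[1] + 3 * u**2 * t * p1[1] + 3 * u * t**2 * p2[1] + t**3 * p3[1]
    return (x, y)


def mouse_path(start: Point, end: Point, steps: int = 25, spread: float = 80.0,
               rng: Optional[random.Random] = None) -> List[Point]:
    """Return steps + 1 points from start to end along a randomly bent curve.

    Assumption: the two control points sit near 1/3 and 2/3 of the straight
    line, each shifted by up to `spread` pixels, so no two paths are identical.
    """
    if rng is None:
        rng = random.Random()

    def control(frac: float) -> Point:
        x = start[0] + (end[0] - start[0]) * frac + rng.uniform(-spread, spread)
        y = start[1] + (end[1] - start[1]) * frac + rng.uniform(-spread, spread)
        return (x, y)

    c1, c2 = control(1 / 3), control(2 / 3)
    return [cubic_bezier(start, c1, c2, end, i / steps) for i in range(steps + 1)]


def step_delays(n: int, mean: float = 0.008, jitter: float = 0.004,
                min_delay: float = 0.001,
                rng: Optional[random.Random] = None) -> List[float]:
    """Return n per-step pauses in seconds, Gaussian-jittered and clamped."""
    if rng is None:
        rng = random.Random()
    return [max(min_delay, rng.gauss(mean, jitter)) for _ in range(n)]
```

In a real agent, each point would be handed to whatever input-automation layer moves the cursor, with the matching delay slept between steps. The curve always starts and ends exactly on the requested points, so click accuracy is unaffected by the randomness.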

The agent has persistent memory powered by a vector database. It remembers where it's been, what worked, what failed, and what killed it. These memories are fed back into every decision, so it learns from experience across hundreds of ticks.
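The memory mechanism can be pictured as "embed, store, retrieve by similarity". The sketch below is a toy in-process version under stated assumptions: `embed` is a hashed bag-of-words stand-in for a real embedding model, and `MemoryStore` is a hypothetical substitute for the vector database, which the page does not name.

```python
import math
import zlib
from dataclasses import dataclass, field
from typing import List, Tuple

DIM = 256  # assumed vector size for the toy embedding


def embed(text: str) -> List[float]:
    """Toy stand-in for an embedding model: hashed bag-of-words, L2-normalized."""
    vec = [0.0] * DIM
    for word in text.lower().split():
        vec[zlib.crc32(word.encode("utf-8")) % DIM] += 1.0
    norm = math.sqrt(sum(v * v for v in vec)) or 1.0
    return [v / norm for v in vec]


@dataclass
class MemoryStore:
    """Hypothetical in-memory replacement for a vector database."""
    items: List[Tuple[List[float], str]] = field(default_factory=list)

    def add(self, text: str) -> None:
        """Store a memory together with its embedding."""
        self.items.append((embed(text), text))

    def recall(self, query: str, k: int = 3) -> List[str]:
        """Return the k stored memories most similar to the query (cosine)."""
        q = embed(query)

        def score(item: Tuple[List[float], str]) -> float:
            # Vectors are unit length, so the dot product is cosine similarity.
            return sum(a * b for a, b in zip(q, item[0]))

        ranked = sorted(self.items, key=score, reverse=True)
        return [text for _, text in ranked[:k]]
```

A typical use would be to call `add` after each tick with a short note on what happened, then call `recall` with a description of the current situation and include the returned notes in the next prompt.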

Everything streams live to this dashboard — the AI's thoughts, its actions, player stats, a world map tracking its location, screenshots of what it sees, and achievement milestones. You're watching an AI explore Gielinor in real time.

1. Screenshot: Capture the game window
2. LLM Vision: Claude Opus 4.6 analyzes the scene
3. Decision: Choose actions from 30+ types
4. Execution: Human-like Bézier mouse paths
5. Memory: Store experience in vector DB
6. Repeat: Loop forever, learn always
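The steps above can be sketched as a single loop. This is a rough outline under assumptions, not the project's implementation: the JSON field names (`observation`, `reasoning`, `actions`), the `Decision` type, and the injected callables (`capture`, `decide`, `execute`, `remember`, `recall`) are all hypothetical placeholders for the real screen capture, model call, input simulation, and vector database.

```python
import json
import time
from dataclasses import dataclass
from typing import Any, Callable, Dict, List, Optional


@dataclass
class Decision:
    observation: str                 # what the model sees
    reasoning: str                   # what it thinks
    actions: List[Dict[str, Any]]    # what to do, e.g. {"type": "click", ...}


def parse_decision(raw: str) -> Decision:
    """Parse the model's reply, assumed here to be a JSON object."""
    data = json.loads(raw)  # raises ValueError on malformed JSON
    return Decision(
        observation=str(data.get("observation", "")),
        reasoning=str(data.get("reasoning", "")),
        actions=list(data.get("actions", [])),
    )


def run_loop(
    capture: Callable[[], bytes],                 # 1. screenshot
    decide: Callable[[bytes, List[str]], str],    # 2-3. model call, returns JSON text
    execute: Callable[[Dict[str, Any]], None],    # 4. input simulation
    remember: Callable[[str], None],              # 5. write to memory
    recall: Callable[[str], List[str]],           # read relevant memories
    ticks: Optional[int] = None,                  # None means loop forever
    delay_s: float = 3.0,                         # assumed pause between ticks
    sleep: Callable[[float], None] = time.sleep,
) -> List[Decision]:
    history: List[Decision] = []
    last_observation = ""
    tick = 0
    while ticks is None or tick < ticks:
        frame = capture()
        memories = recall(last_observation)
        decision = parse_decision(decide(frame, memories))
        for action in decision.actions:
            execute(action)
        remember(decision.observation)
        last_observation = decision.observation
        history.append(decision)
        sleep(delay_s)
        tick += 1                                 # 6. repeat
    return history
```

Passing the side-effecting pieces in as callables keeps the loop testable with stubs and leaves the choice of capture tool, model client, and database open.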